Abstract: The wide adoption of data-intensive algorithms to tackle today’s computational problems introduces new challenges in designing efficient computing systems to support these applications. In critical domains such as machine learning and graph processing, data movement remains a major performance and energy bottleneck. As repeated memory accesses to off-chip DRAM impose an overwhelming energy cost, we need to rethink the way embedded (i.e., on-chip) memory systems are built in order to increase storage density and energy efficiency beyond what is currently possible with SRAM. To address these challenges and inform future memory system design, we developed NVMExplorer: a design space exploration framework that answers key cross-computing-stack design questions and reveals opportunities and optimizations for embedded NVMs under realistic system-level constraints, while providing a flexible interface and modular evaluation to support further investigations.

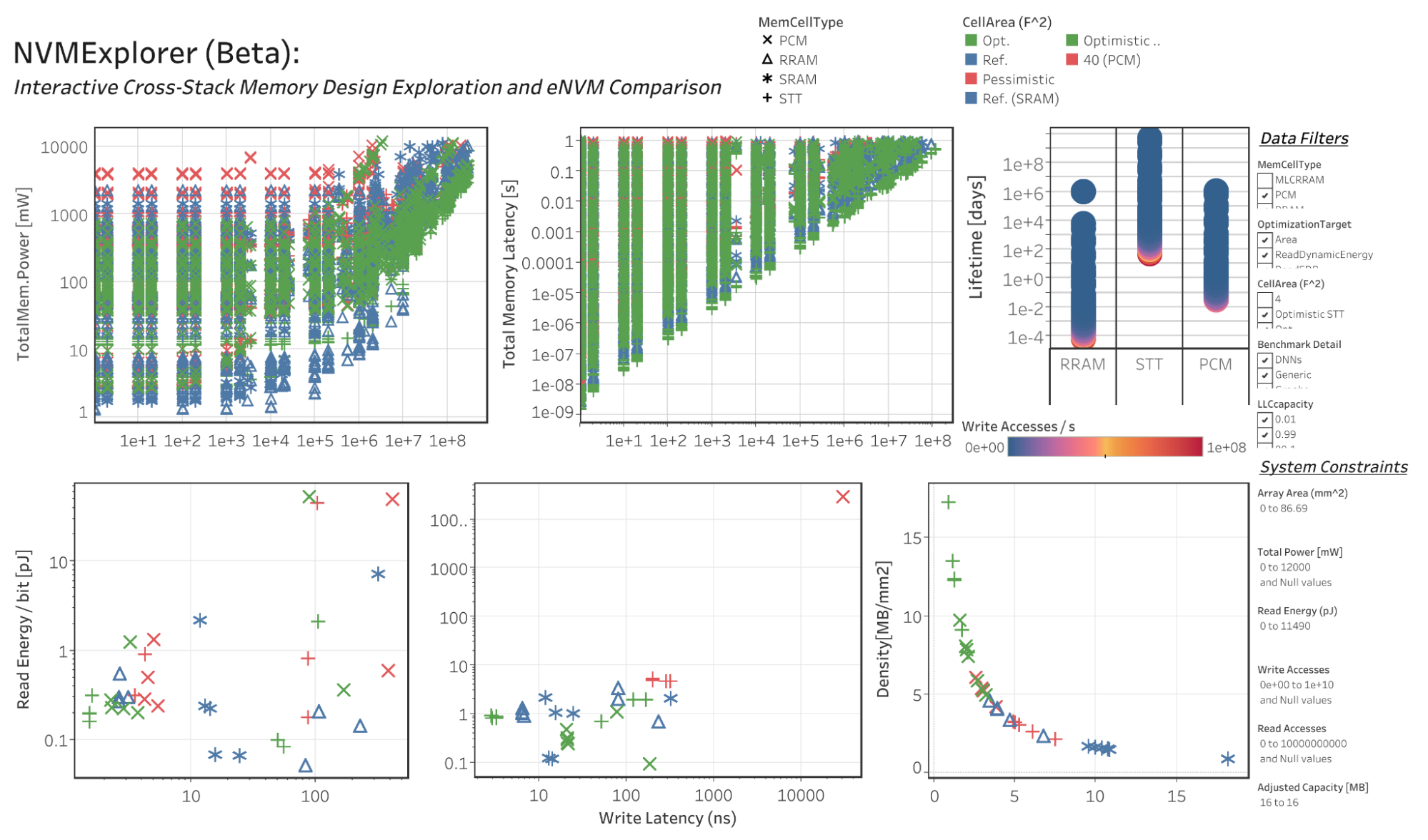

This tutorial provides a straightforward approach for comparing embedded memory solutions (including a broad selection of eNVM proposals) based on practical use cases, and it equips users to add and evaluate alternative application, system, and memory device properties and constraints. It walks through hands-on design studies using our open-source code base (NVMExplorer [1] (http://nvmexplorer.seas.harvard.edu/)), drawing on previously proposed eNVM-based architectures [2], [3]. The tutorial also provides a database of eNVM characteristics from recent literature (122 surveyed ISSCC, IEDM, and VLSI publications), presents specific “tentpole” technology configurations that summarize limits and trends, gives instructions for using our interactive data visualization dashboard (shown in the graphic above), and walks through fault modeling and fault injection studies. Finally, we will guide attendees in configuring their own design studies and exploring the most recent expansions and features of the NVMExplorer framework.

Website: http://nvmexplorer.seas.harvard.edu/

Open-Source Project: https://github.com/lpentecost/NVMExplorer

Companion Paper: L. Pentecost*, A. Hankin*, M. Donato, M. Hempstead, G. Y. Wei, and D. Brooks (*joint first authors), “NVMExplorer: A Framework for Cross-Stack Comparisons of Embedded Non-Volatile Memory Solutions.” The 28th IEEE International Symposium on High-Performance Computer Architecture (HPCA-28). 2022.

Half-Day Tutorial Outline:

NVMExplorer: A Framework for Cross-Stack Comparisons of Embedded Non-Volatile Memories

- “Introduction”, Lillian Pentecost

- “Basic Usage: Configuration Files and Inputs”, Alexander Hankin

- “DNN Accelerator Case Study: Which eNVM is most compelling?”, Lillian Pentecost

- “Customizing Memory Cell Parameters for Co-Design”, Alexander Hankin

- “Fault Modeling and Fault Injection”, Lillian Pentecost

- “Navigating the web-based visualization tool”, Alexander Hankin

- “Conclusion; Configuring your own design study”, Lillian Pentecost

Organizers and Affiliations:

- Lillian Pentecost, Harvard University: Lillian Pentecost is a final-year Ph.D. candidate in Computer Science at Harvard University advised by Professors David Brooks and Gu-Yeon Wei. Her research enables denser and more efficient future memory systems by exposing and combining design choices in emerging memory devices with architectural support and the demands of critical, data-intensive applications like machine learning. Lillian holds a B.A. in Physics and Computer Science from Colgate University and an S.M. in Computer Science from Harvard University, and she has completed research internships at IBM, Microsoft, and NVIDIA.

- Alexander Hankin, Tufts University: Alexander Hankin is a final-year PhD student in the Electrical and Computer Engineering Department at Tufts University. His research interests include embedded non-volatile memories, thermal hotspots, and ion-trap quantum architectures. He received the BS and MS degrees in Computer Engineering and Electrical Engineering, respectively, at Tufts, and he has completed research internships at Google and Intel.

- Marco Donato, Tufts University: Marco Donato is an assistant professor in the Department of Electrical and Computer Engineering at Tufts University. Prior to joining Tufts, he was a postdoctoral fellow in the John A. Paulson School of Engineering and Applied Sciences at Harvard University. He earned a PhD in Electrical Sciences and Computer Engineering from Brown University and holds MSc and BSc degrees from the University of La Sapienza in Rome, Italy. His research focuses on designing reliable and energy-efficient hardware leveraging emerging technologies. He is currently working on co-design methodologies for building specialized architectures for machine learning applications that leverage dense, fault-prone embedded non-volatile memories.

- Mark Hempstead, Tufts University: Mark Hempstead is an Associate Professor at Tufts University. His research has been applied to a range of platforms, including chip multiprocessors, high-performance computing, machine learning systems, embedded systems, and IoT. Dr. Hempstead received a BS in Computer Engineering from Tufts University and his MS and Ph.D. in Engineering from Harvard University. Prior to joining Tufts University in 2015, he was an Assistant Professor at Drexel University. He received the NSF CAREER award in 2014.

- Gu-Yeon Wei, Harvard University: Gu-Yeon Wei is the Robert and Suzanne Case Professor of Electrical Engineering and Computer Science in the Paulson School of Engineering and Applied Sciences (SEAS) at Harvard University. He received his BS, MS, and PhD degrees in Electrical Engineering from Stanford University. His research interests span multiple layers of a computing system: mixed-signal integrated circuits, computer architecture, and design tools for efficient hardware. His research efforts focus on identifying synergistic opportunities across these layers to develop energy-efficient solutions for a broad range of systems, from flapping-wing microrobots to machine learning hardware for IoT devices to large-scale servers.

- David Brooks, Harvard University: David Brooks is the Haley Family Professor of Computer Science in the School of Engineering and Applied Sciences at Harvard University. Prior to joining Harvard, he was a research staff member at IBM T.J. Watson Research Center. Prof. Brooks received his BS in Electrical Engineering at the University of Southern California and MA and PhD degrees in Electrical Engineering at Princeton University. His research interests include resilient and power-efficient computer hardware and software design for high-performance and embedded systems. Prof. Brooks is a Fellow of the IEEE and has received several honors and awards including the ACM Maurice Wilkes Award and ISCA Influential Paper Award.

References:

[1] L. Pentecost*, A. Hankin*, M. Donato, M. Hempstead, G. Y. Wei, and D. Brooks (*joint first authors), “NVMExplorer: A Framework for Cross-Stack Comparisons of Embedded Non-Volatile Memory Solutions.” The 28th IEEE International Symposium on High-Performance Computer Architecture (HPCA-28). 2022.

[2] A. Hankin, T. Shapira, K. Sangaiah, M. Lui, and M. Hempstead, “Evaluation of non-volatile memory based last level cache given modern use case behavior,” in IEEE International Symposium on Workload Characterization (IISWC), 2019.

[3] L. Pentecost, M. Donato, B. Reagen, U. Gupta, S. Ma, G.-Y. Wei, and D. Brooks, “MaxNVM: Maximizing DNN storage density and inference efficiency with sparse encoding and error mitigation,” in Proceedings of the 52nd International Symposium on Microarchitecture, 2019.